About a month ago, I was blessed to happen upon a BuzzFeed article about what an AI thinks Europeans think Americans look like. There was one AI-generated image for each state, and some of them were pretty wild. I would go so far as to say some of them were even insulting.

For starters, they were obviously filled with stereotypes, most of which were probably gross and untrue, but some of which I found pretty funny. For example, there was the New Mexico picture, which was just a bunch of aliens, most likely due to the Roswell UFO legend and the Area 51 alien rumors that were especially popular in 2019 (although Area 51 itself is in Nevada). There was also the one from Oklahoma, which was clearly just based on the musical Oklahoma!, which premiered on Broadway in 1943.

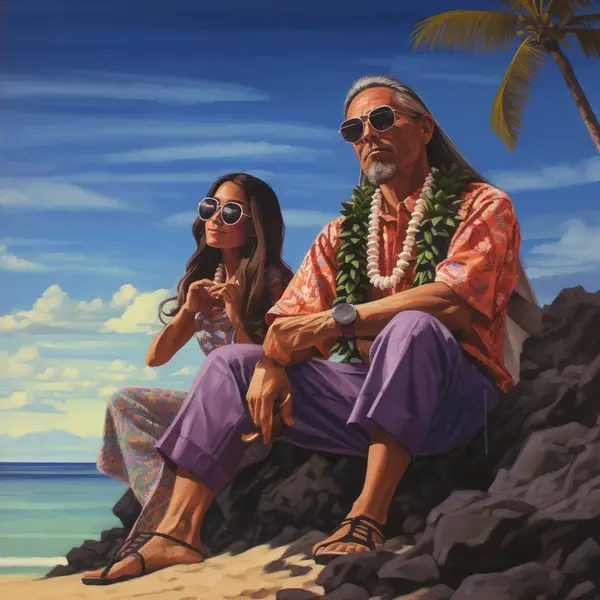

Others that were clearly based on a really small group or a single figure from the state include Virginia, California, and Hawaii, which were portrayed as a person looking like George Washington, a Hollywood star, and beach-going people wearing leis, respectively.

One image that I found particularly perplexing was that of West Virginia. It featured a man in overalls with gray hair and a long beard, apparently boxing some form of nearly bipedal, wild boar-like creature. I do not know where the AI got this idea. The only reference to West Virginia I have ever heard is John Denver’s “Take Me Home, Country Roads,” if I’m being honest.

Some pictures that are likely more insulting are those from Alabama, Mississippi, New Hampshire, and New Jersey, the latter two of which feature people spilling pasta all over themselves. The picture for Mississippi shows what appears to be a church congregation full of screaming people, who may or may not be doing a fascist salute. Alabama shows an older, unkempt man who is balding and missing teeth, staring into the camera with a crazed look in his eyes.

Discrimination is one of the top issues when it comes to artificial intelligence. A neural net can only draw its “intelligence” from the sources it is given, so any bias or political or social leaning in those sources will skew its interpretation of reality. Additionally, a prompt such as “what people in Europe think about Americans” is already asking for stereotypes, which will obviously exacerbate pre-existing issues.

This discrimination is so prevalent and troubling that it has caught the attention of the United Nations Human Rights Office of the High Commissioner. A report from the office found that an especially troubling manifestation of this bias is in experimental predictive policing.

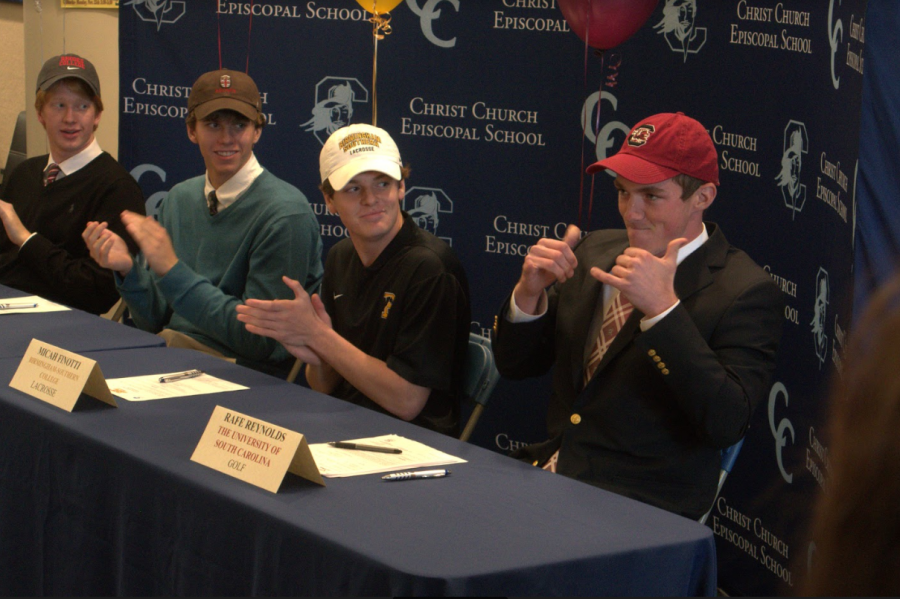

Members of the Christ Church community seem to have an overwhelmingly positive opinion of generative AI. When asked his opinion on AI images, senior Nathaniel Jakubowicz commented that “AI is sick.”

Jakubowicz was unaware of the potential discrimination present in the technology, as were other seniors I asked.

Another issue commonly raised in discussions about generative AI is that the process used to obtain the materials needed for the computer chips that power it is very damaging to the environment, according to the Yale School of the Environment.